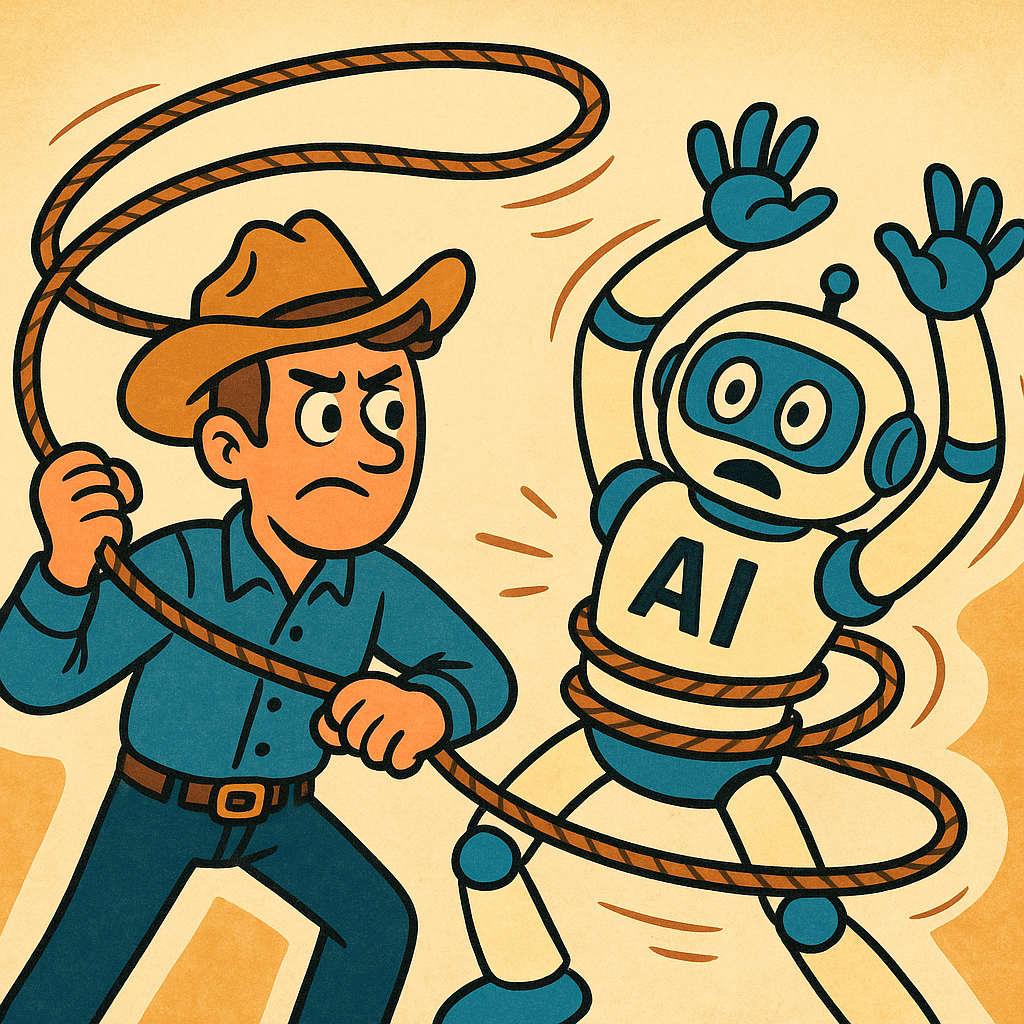

Wrangling AI with MCP: A Journey into Human Control

How I stopped worrying and built a system I could trust

A few months ago, I was staring at a blank blog post draft, cursor blinking, coffee cooling. I had ideas. I even had some outlines buried in Notion. But the overhead of writing—the formatting, the remembering, the switching between tools—was getting in the way of actually thinking. I didn’t need inspiration. I needed infrastructure. Not something to replace me, but something that made it easier for me to show up.

Like a lot of people working at the intersection of product, engineering, and AI, I’ve been watching the wave of “intelligent assistants” and “AI copilots” crest into something less theoretical and more operational. The tools are getting better, yes—but they’re also getting blurrier. Capable, fast, and increasingly autonomous, but hard to reason about, hard to inspect, and easy to over-delegate. You can give up more control than you realize without meaning to. What I wanted was something a little more boring: a predictable, inspectable layer of control over what the AI was allowed to do. That’s how I ended up building around MCP.

For the uninitiated, MCP (short for Model Context Protocol) is an open protocol for structuring how AI models interface with your systems and workflows. At a glance, it looks like plumbing: a schema, some handlers, a well-defined interface for passing requests back and forth between an AI application and the servers that expose your tools and data. But if you step back, it’s more than that. It’s a conceptual framework for defining intent, boundaries, and accountability in AI-assisted systems. It’s a reminder that context isn’t metadata. It’s governance.
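To make the "plumbing" concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. Here is a minimal sketch of that message shape. The tool name `create_draft_post` and its arguments are hypothetical, chosen only for illustration. A real server advertises its own tool names and input schemas.

```typescript
// Shape of the JSON-RPC 2.0 request an MCP client sends to invoke a tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: {
    name: string;                       // tool name advertised by the server
    arguments: Record<string, unknown>; // tool-specific input
  };
}

// Build a tool-call request from an id, a tool name, and its arguments.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>,
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical tool name and arguments, for illustration only.
const request = buildToolCall(1, "create_draft_post", {
  title: "Wrangling AI with MCP",
  status: "draft",
});

console.log(JSON.stringify(request, null, 2));
```

Everything the model can ask for has to pass through a message like this one, which is what makes the boundary easy to inspect.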

There is a very long list of MCP servers of varying complexity on GitHub that you can try right now. Alternatively, Smithery.ai offers a more repository-like approach.

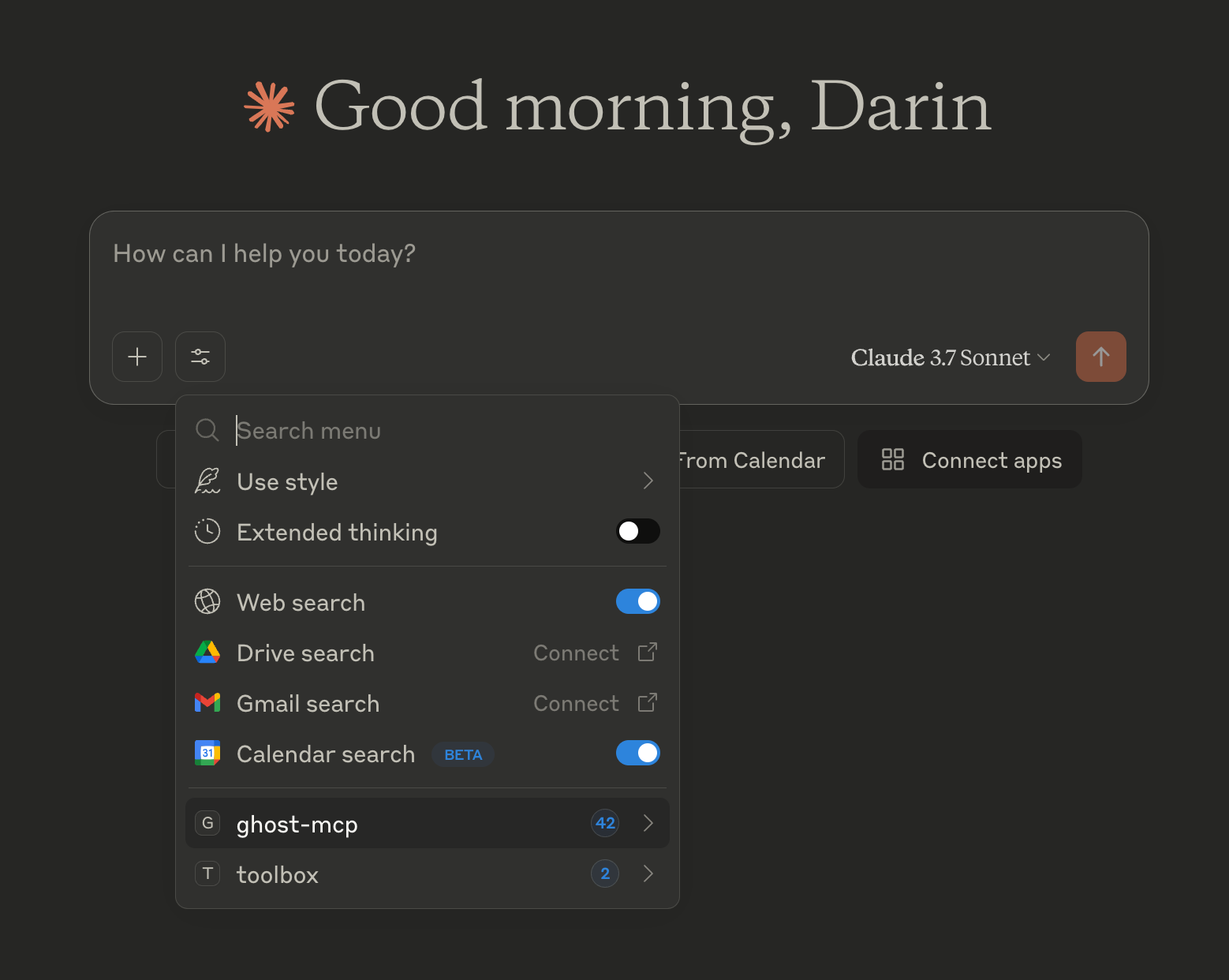

I decided to test it out by building a lightweight automation layer around my personal blog. I was already using Ghost as a CMS, so I forked Ghost-MCP, an open-source project that connects Ghost with an MCP server. The goal was simple: I wanted to use an LLM to help me brainstorm topics, draft content, and post when I was ready—without handing over creative control or sacrificing editorial judgment.

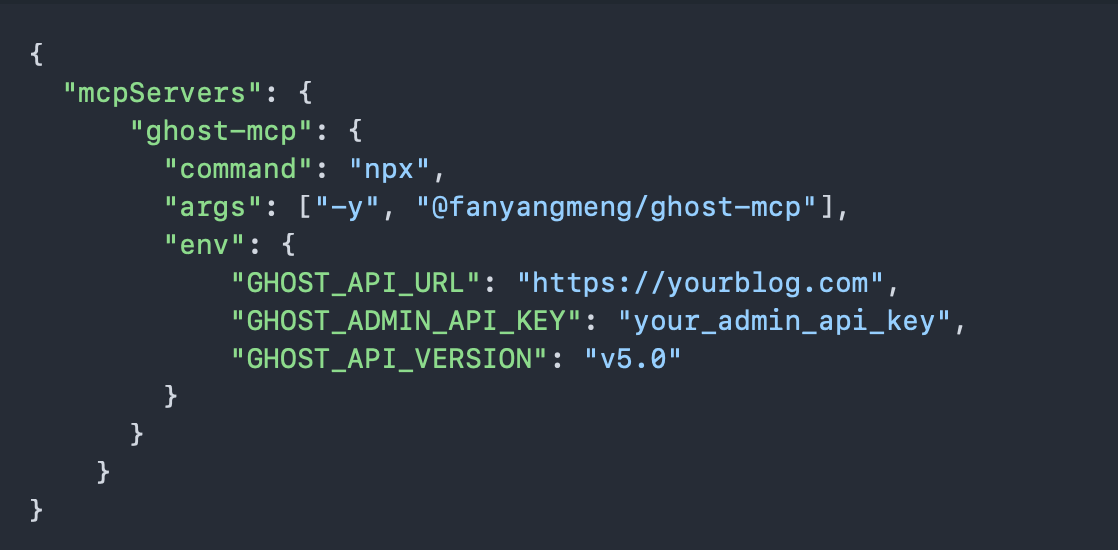

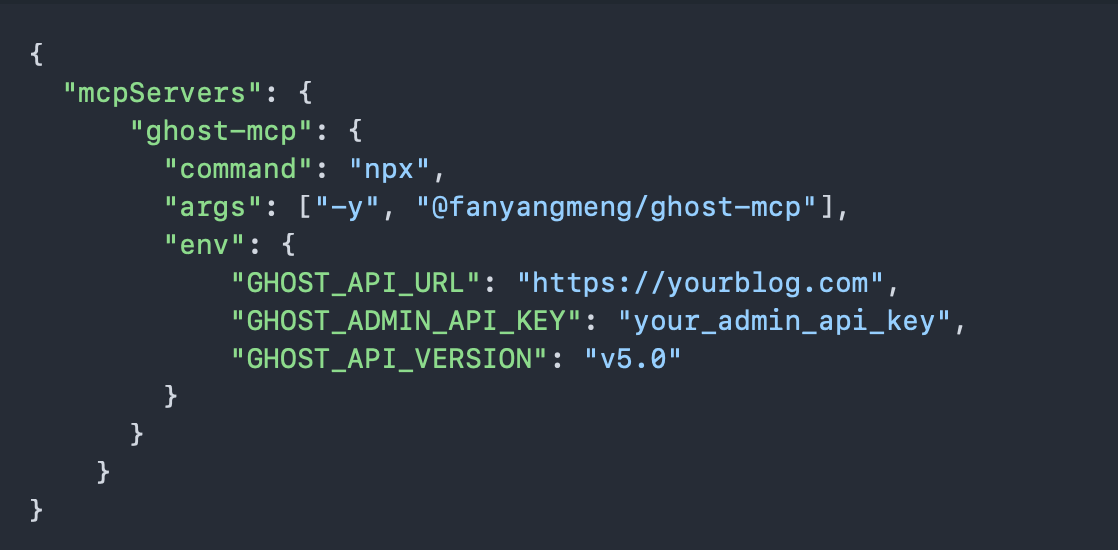

The setup process was straightforward. Since I already had npm installed, I simply dropped a snippet into a config file, much as I would for a Docker setup. From there, the server authenticated against my blog right away using the Ghost Admin API.
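For readers who have not seen one of these snippets, here is a rough sketch of the kind of entry many MCP clients read from a JSON config file: a `mcpServers` map from a server name to a command, its arguments, and its environment. This is not the exact snippet from my setup. The package name `your-ghost-mcp-package` is a placeholder, and the environment variable names are assumptions, so check the README of whichever Ghost MCP server you install.

```typescript
// One server entry: how the client should launch the MCP server process.
interface McpServerEntry {
  command: string;
  args: string[];
  env: Record<string, string>;
}

// Top-level config shape used by several MCP clients.
interface McpClientConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Build a config entry for a Ghost MCP server launched through npx.
function ghostServerConfig(apiUrl: string, adminApiKey: string): McpClientConfig {
  return {
    mcpServers: {
      ghost: {
        command: "npx",
        // Placeholder package name: substitute the server you actually use.
        args: ["-y", "your-ghost-mcp-package"],
        env: {
          // Assumed variable names: confirm them in the server's README.
          GHOST_API_URL: apiUrl,
          GHOST_ADMIN_API_KEY: adminApiKey,
        },
      },
    },
  };
}

const config = ghostServerConfig("https://blog.example.com", "<admin-api-key>");

// Print the JSON you would paste into the client's config file.
console.log(JSON.stringify(config, null, 2));
```

The Admin API key grants write access to the blog, so it belongs in local config or a secrets manager, not in a shared repository.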

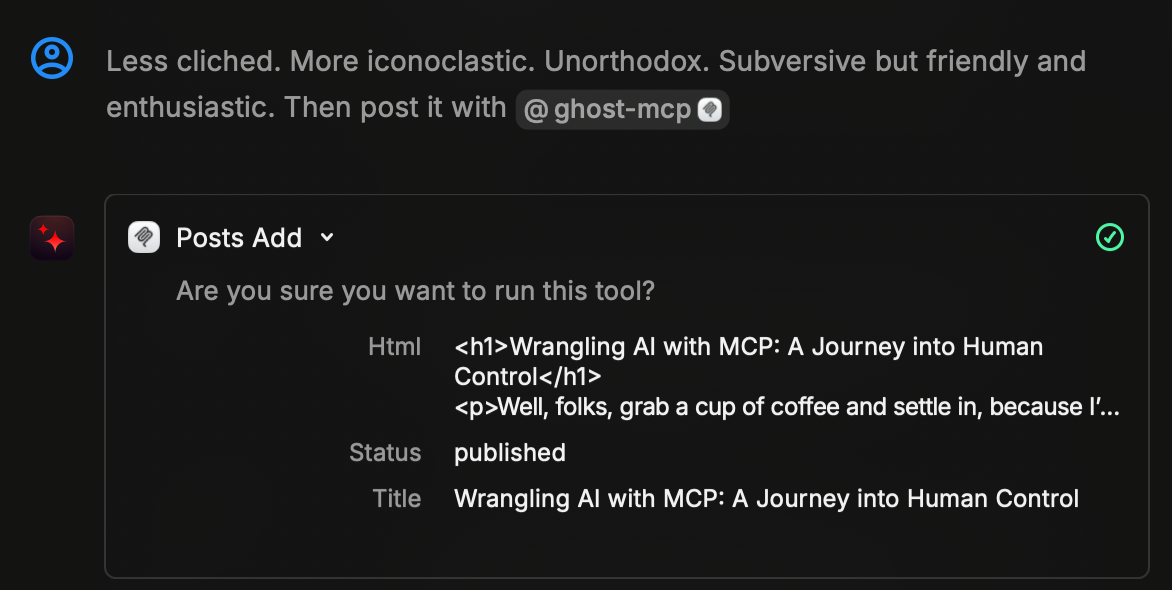

From there, I scoped a few specific tasks for the LLM: generate post ideas, write a first draft based on a given outline, suggest titles, and summarize old posts. All outputs were saved as drafts until I approved them. Nothing went live without my review. This wasn’t fire-and-forget automation—it was a structured collaboration with clearly defined roles.
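The two rules in that paragraph (a fixed list of tasks, and nothing published without review) can be sketched in a few lines. This is an illustrative model of the policy, not code from Ghost-MCP. The task identifiers, the `Post` shape, and the function names are all hypothetical.

```typescript
// The only tasks the model may be asked to perform (hypothetical identifiers).
const ALLOWED_TASKS = [
  "generate_ideas",
  "write_draft",
  "suggest_titles",
  "summarize_post",
] as const;
type Task = (typeof ALLOWED_TASKS)[number];

interface Post {
  title: string;
  body: string;
  status: "draft" | "published";
  approvedBy?: string;
}

// Type guard: is this task on the allowlist?
function isAllowedTask(task: string): task is Task {
  return (ALLOWED_TASKS as readonly string[]).indexOf(task) !== -1;
}

// Model output is always stored as a draft, and only for in-scope tasks.
function saveModelOutput(task: string, title: string, body: string): Post {
  if (!isAllowedTask(task)) {
    throw new Error(`Task not in scope: ${task}`);
  }
  return { title, body, status: "draft" };
}

// Publishing is a separate step that requires a named human reviewer.
function publish(post: Post, reviewer: string): Post {
  if (reviewer.trim() === "") {
    throw new Error("A human reviewer is required to publish");
  }
  return { ...post, status: "published", approvedBy: reviewer };
}

const draft = saveModelOutput("write_draft", "Wrangling AI with MCP", "First pass...");
const live = publish(draft, "me");
console.log(draft.status, live.status);
```

The point of the split is that the model-facing function has no path to `published` at all. Only the human-facing one does.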

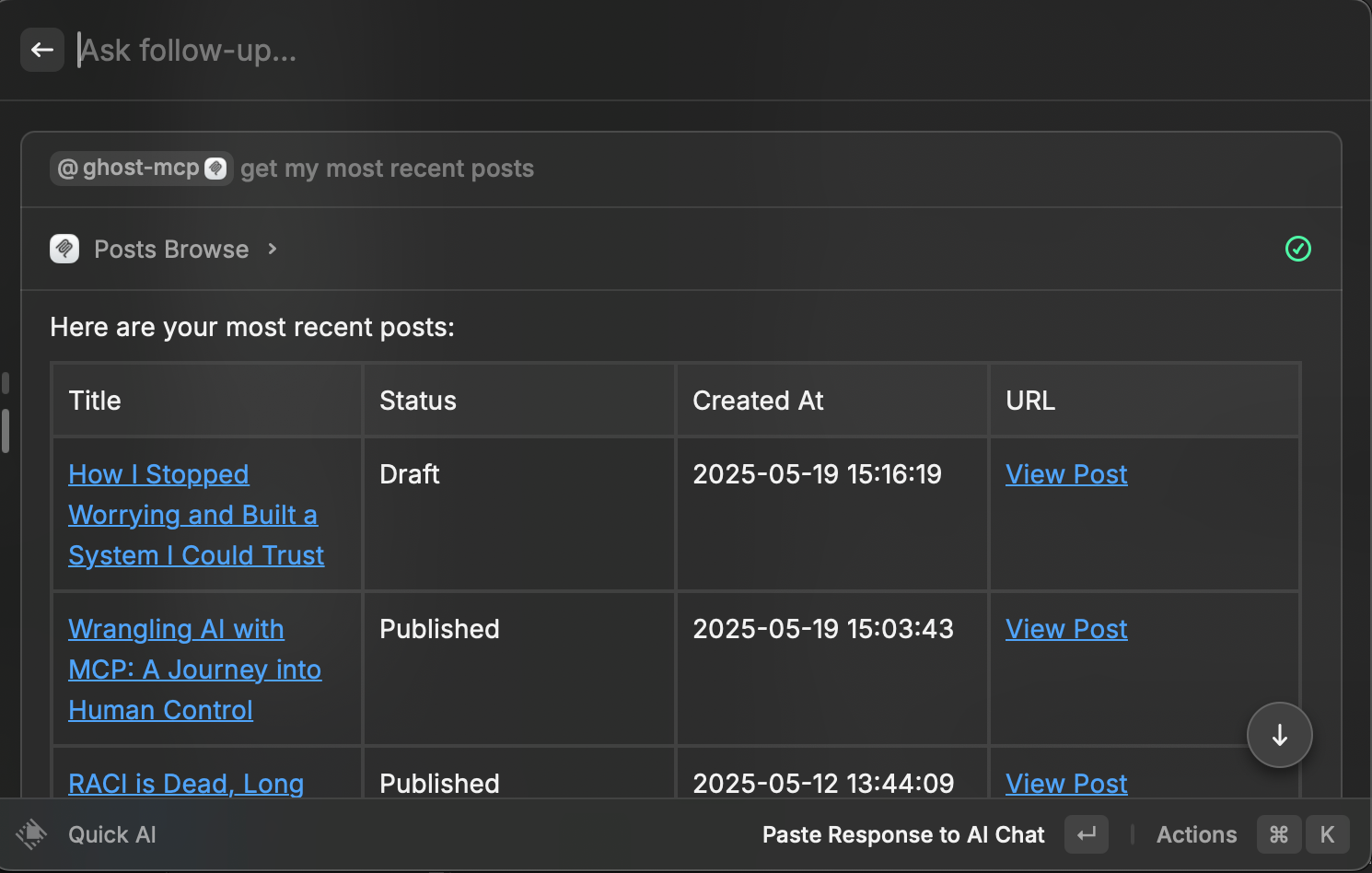

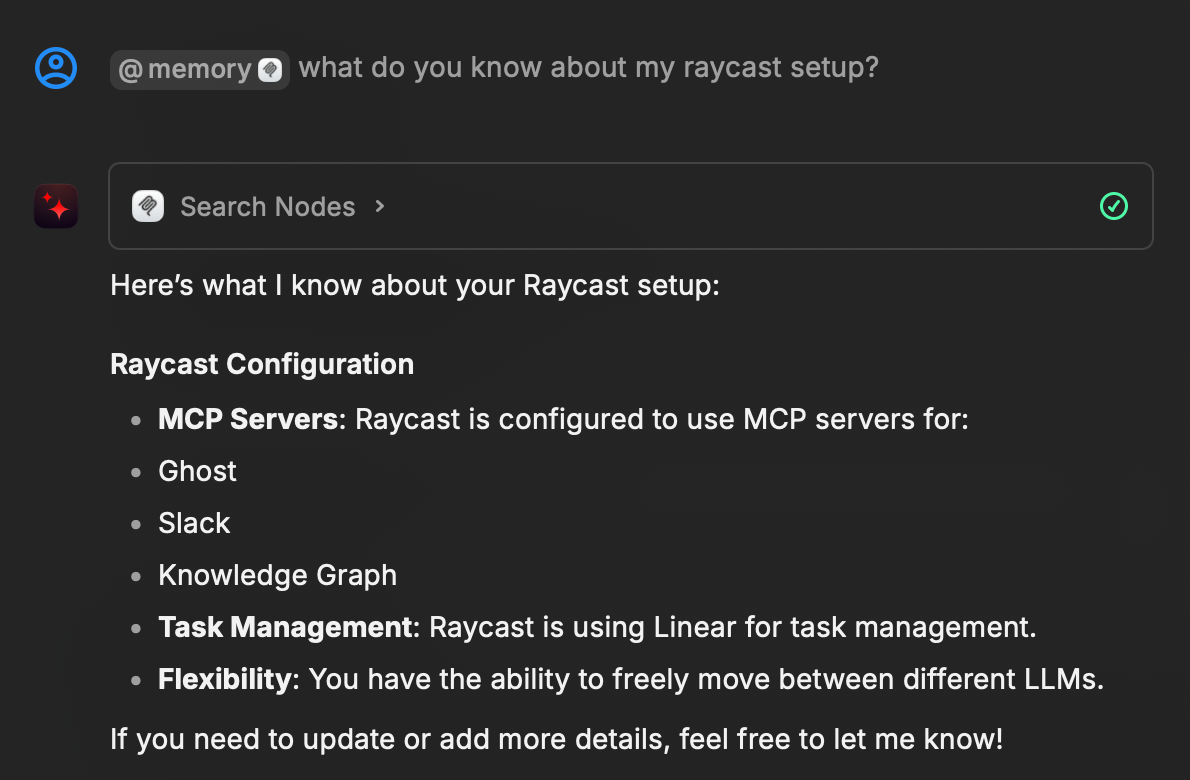

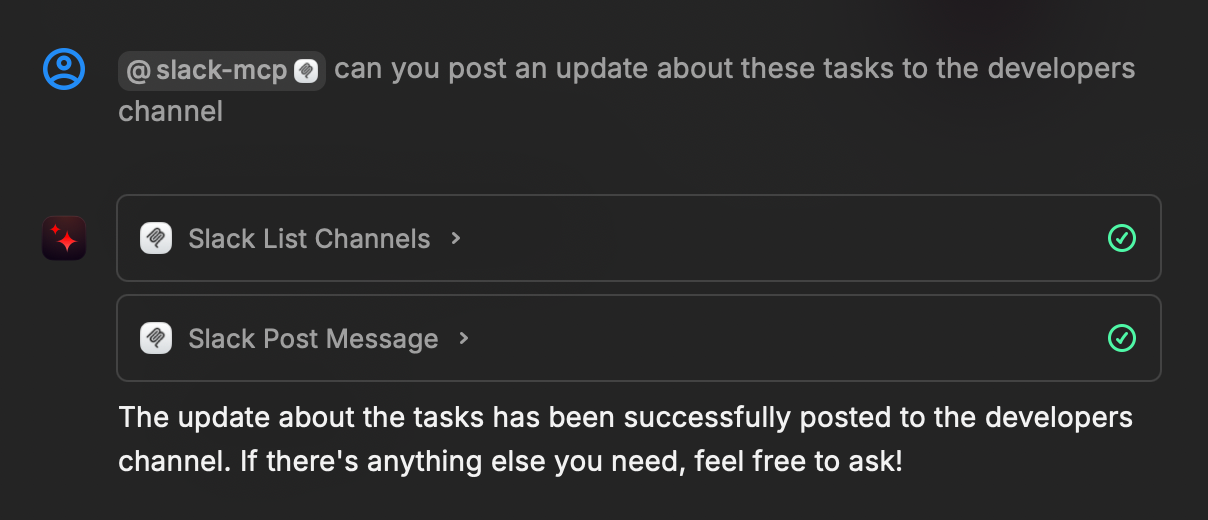

To make interaction more fluid, I added Raycast commands. This let me trigger LLM requests with a keystroke: generate a post, draft a tweet thread, pull highlights from a recent article. No browser tabs. No context switching. The interface faded into the background, and what remained was a reliable loop: prompt, review, revise, publish.

The result wasn’t magic. It was just useful. I could move faster without lowering standards. I spent less time wrangling markdown and more time deciding what I actually wanted to say. And because the tasks were well-bounded, the model never tried to outsmart me or go off-script. It did what I asked it to do—and nothing more.

That distinction matters. Most AI tooling today is built to maximize capability. It’ll generate paragraphs, write code, translate documents, summarize meetings. But capability without constraints tends to drift. Without a clear sense of scope or oversight, even good output starts to feel alien—like something that came from your brain but doesn’t quite sound like you. MCP brings the boundaries back into focus. It lets you work with AI on your own terms, not just with whatever defaults the API ships with.

There’s a larger point here, one that extends well beyond blogging. As we integrate more AI into our workflows, it’s easy to fall into a kind of quiet abdication. You let the model suggest the text, the structure, the headline, the strategy. The risk isn’t just bad content. The risk is disconnection. You stop being the author and start becoming the approver, if you’re lucky. If you’re not paying attention, you’re not even that.

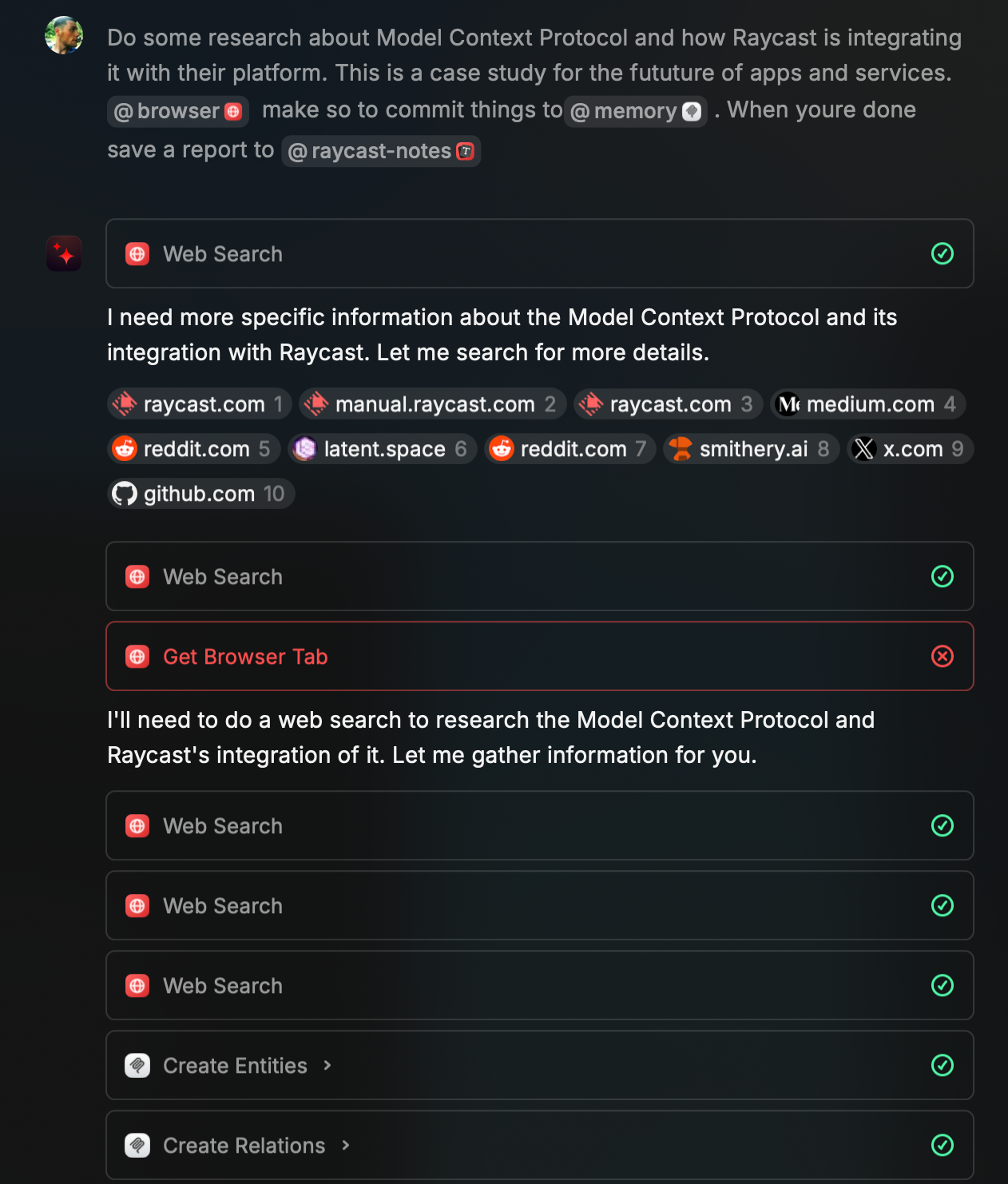

MCP is a counterweight to that drift. It forces you to define the system. It makes you specify what the model should know, what it should be able to do, and when a human needs to intervene. It’s not an all-in-one tool. It’s not opinionated. But it is composable, and more importantly, it’s inspectable. You can see what context the model had, which actions it requested, and what your role was in the loop.
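One way to make that inspectability tangible is to keep your own record of each tool call: what context was supplied and what the human decided. MCP does not ship a log like this. The sketch below is an application-side assumption, and the `AuditLog` class and its field names are hypothetical.

```typescript
type Decision = "approved" | "rejected" | "pending";

interface AuditEntry {
  timestamp: string;         // ISO 8601 time the call was recorded
  tool: string;              // tool the model asked to run
  contextProvided: string[]; // names of the documents given to the model
  humanDecision: Decision;
}

class AuditLog {
  private entries: AuditEntry[] = [];

  // Record a tool call as pending and return its index for later lookup.
  record(tool: string, contextProvided: string[]): number {
    this.entries.push({
      timestamp: new Date().toISOString(),
      tool,
      contextProvided: contextProvided.slice(), // copy to avoid later mutation
      humanDecision: "pending",
    });
    return this.entries.length - 1;
  }

  // Attach the human's decision to a previously recorded call.
  decide(index: number, decision: "approved" | "rejected"): void {
    const entry = this.entries[index];
    if (entry === undefined) {
      throw new Error(`No audit entry at index ${index}`);
    }
    entry.humanDecision = decision;
  }

  // Everything still waiting for a human.
  pending(): AuditEntry[] {
    return this.entries.filter((e) => e.humanDecision === "pending");
  }
}

const log = new AuditLog();
const first = log.record("write_draft", ["outline-mcp-post"]);
log.record("suggest_titles", ["draft-mcp-post"]);
log.decide(first, "approved");
console.log(log.pending().map((e) => e.tool));
```

A list of pending entries doubles as a to-do list for the reviewer, which keeps the human step from quietly disappearing.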

This whole experiment started as a way to reduce friction in a very specific part of my creative process. But it ended up clarifying something broader: my relationship to AI shouldn’t be passive. It should be designed. And if I don’t define the boundaries, someone—or something—else will.

We don’t need AI to be smarter. We need it to be more accountable. And that starts with us being clear about what we’re delegating, why we’re delegating it, and how we make sure we’re still responsible for the outcome. MCP doesn’t solve that for you. But it gives you a place to start.